## TL;DR

The article explains how to make sure users get modified static resources promptly when forced caching is in use. There are several solutions:

- Change the resource file name, e.g. custom-v1.css.
- Change the resource file name to include a hash of the file content (the hash changes when the content changes and stays the same otherwise), e.g. index.v1tg6l.css.
- Add a query parameter to the resource URL (the parameter does nothing except change the URL), using a hash like the one in the second option, e.g. index.css?v=qb6l0p.
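The three naming schemes in the list above can be sketched as small helpers. This is a minimal illustration, not any particular build tool's behavior: the helper names, the 6-character length, and the choice of SHA-256 are assumptions. The sample hashes in the list (such as `v1tg6l`) are not hexadecimal, so whatever produced them used a different encoding, and this sketch will not reproduce them.

```python
import hashlib
from pathlib import PurePosixPath


def content_hash(content: bytes, length: int = 6) -> str:
    """Short hex digest of the file content (SHA-256 chosen for illustration)."""
    return hashlib.sha256(content).hexdigest()[:length]


def manual_version_name(path: str, version: str) -> str:
    """Option 1: a hand-maintained version in the file name, e.g. custom-v1.css."""
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}-{version}{p.suffix}"))


def hashed_name(path: str, content: bytes) -> str:
    """Option 2: the content hash in the file name, e.g. index.<hash>.css."""
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{content_hash(content)}{p.suffix}"))


def hashed_query(path: str, content: bytes) -> str:
    """Option 3: the same hash as a query parameter; the file name is unchanged."""
    return f"{path}?v={content_hash(content)}"


if __name__ == "__main__":
    css = b"body { color: red; }"
    print(manual_version_name("custom.css", "v1"))  # custom-v1.css
    print(hashed_name("index.css", css))   # index.<6 hex chars>.css
    print(hashed_query("index.css", css))  # index.css?v=<the same 6 hex chars>
```

Options 2 and 3 change the URL only when the content changes; option 1 relies on someone remembering to bump the version.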

## Main Text

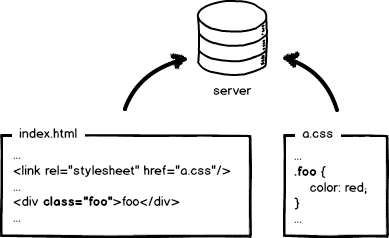

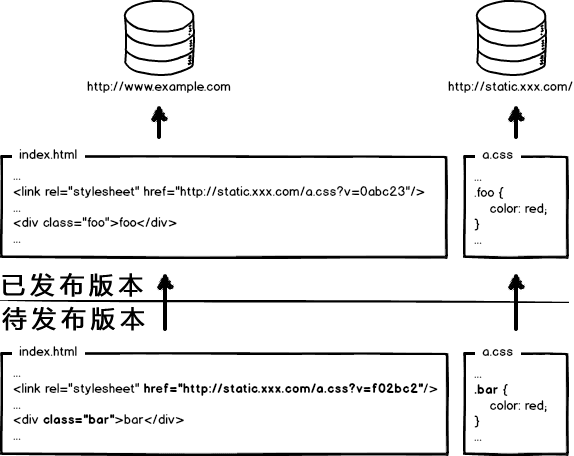

Let's go back to basics and start with front-end development in its most primitive form. The images above show a "cute" index.html page and its stylesheet a.css. You write the code in a text editor, with no compilation needed, preview it locally, confirm it looks right, upload it to the server, and wait for users to visit. Front-end development is so simple and fun, and the barrier to entry is so low, that you can learn it in minutes!

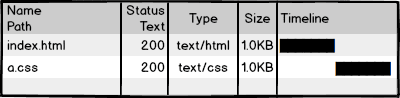

Then we visit the page, see the result, and check the network request: 200! Not bad, perfect! So, is development done? Wait, it's not over yet! At large companies, the insane traffic volumes and performance targets make front-end development anything but "fun". Look at the request for a.css: if it has to be downloaded every time a user visits the page, it hurts performance and wastes bandwidth. We would rather have this:

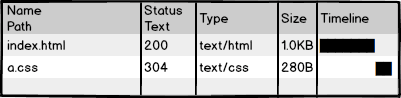
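The 304 in the image above comes from a conditional request: the browser sends back the validator it stored (here an `ETag`, echoed in `If-None-Match`), and the server answers 304 with an empty body if nothing has changed. The sketch below shows only the server-side decision; the function names and the truncated SHA-256 ETag are assumptions, and weak validators (`W/`) and `If-None-Match: *` are not handled. Either way, the browser still sends a request and waits for the reply.

```python
import hashlib
from typing import Optional


def make_etag(content: bytes) -> str:
    """A strong validator derived from the content (one common choice among several)."""
    return '"' + hashlib.sha256(content).hexdigest()[:16] + '"'


def handle_conditional_get(
    content: bytes, if_none_match: Optional[str]
) -> tuple[int, dict[str, str], bytes]:
    """Return (status, headers, body) for a GET that may carry If-None-Match.

    Simplified: weak validators (W/...) and "*" are not handled.
    """
    etag = make_etag(content)
    # no-cache: the browser may store the file but must revalidate before each use.
    headers = {"ETag": etag, "Cache-Control": "no-cache"}
    if if_none_match is not None:
        candidates = [tag.strip() for tag in if_none_match.split(",")]
        if etag in candidates:
            # The browser's copy is still current: 304 with an empty body.
            return 304, headers, b""
    return 200, headers, content


if __name__ == "__main__":
    css = b"body { color: red; }"
    status, headers, _ = handle_conditional_get(css, None)
    print(status)  # 200: first visit, full download
    status, _, body = handle_conditional_get(css, headers["ETag"])
    print(status, len(body))  # 304 0: revalidated, but still a round trip
```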

Returning 304 lets the browser reuse its local copy. But is that enough? No! 304 belongs to conditional caching, which still requires a round trip to the server. Our optimization standards are extreme, so we must eliminate this request entirely and get this instead:

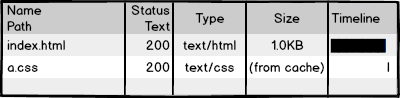
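Forced (strong) caching is driven by response headers alone. A minimal sketch, assuming a one-year lifetime and the helper names shown: `Cache-Control: max-age` is what current browsers use (it takes precedence over `Expires` when both are present), and `Expires` is the older absolute-date form. `is_fresh` is a simplified version of the browser's freshness check, which in reality also accounts for the response's `Age`.

```python
from email.utils import formatdate

ONE_YEAR = 365 * 24 * 3600  # an illustrative "long" lifetime, in seconds


def strong_cache_headers(now: float, max_age: int = ONE_YEAR) -> dict[str, str]:
    """Headers that let the browser reuse the file without contacting the server."""
    return {
        "Cache-Control": f"public, max-age={max_age}",
        # Absolute expiry in HTTP-date format, e.g. "Thu, 01 Jan 1970 00:01:00 GMT".
        "Expires": formatdate(now + max_age, usegmt=True),
    }


def is_fresh(stored_at: float, max_age: int, now: float) -> bool:
    """Simplified browser check: while fresh, no request is sent at all."""
    return now - stored_at < max_age


if __name__ == "__main__":
    print(strong_cache_headers(0, 60))
    # {'Cache-Control': 'public, max-age=60', 'Expires': 'Thu, 01 Jan 1970 00:01:00 GMT'}
    print(is_fresh(stored_at=0, max_age=60, now=59))  # True: served from local cache
```

The flip side is that while `is_fresh` holds, a file updated on the server is never fetched.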

Force the browser to use its local cache (via Cache-Control/Expires) so that it never contacts the server. Okay, request optimization is now extreme, but here comes the problem: if the browser is not allowed to request the resource, how does the cache ever get updated? Fortunately, someone came up with a solution: change the paths of the referenced resources in the page, and the browser, seeing a new URL, abandons the old cache entry and loads the new resource. Something like this:

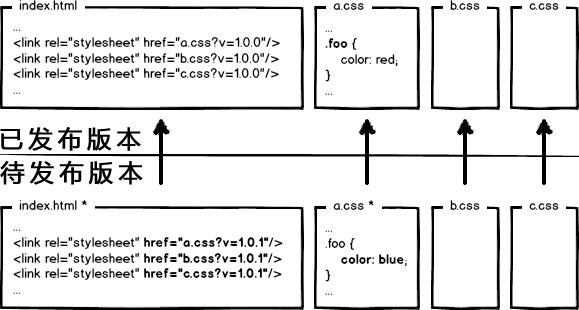

On the next release, change the link to the new version and the resource gets updated. Problem solved?! Of course not! Big companies' obsession continues. Consider this situation:

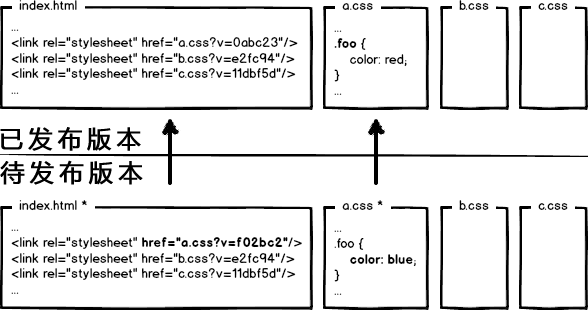
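The situation in the image can be reproduced with a single release-wide version string appended to every URL. In this sketch (the helper names and the `?v=` parameter are assumptions), bumping the version for a release that only touched `a.css` still changes all three URLs:

```python
def versioned_urls(files: list[str], version: str) -> dict[str, str]:
    """Append one release-wide version string to every resource URL."""
    return {name: f"{name}?v={version}" for name in files}


def invalidated(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Resources whose URL changed, i.e. whose cached copy the browser abandons."""
    return [name for name, url in new.items() if old.get(name) != url]


if __name__ == "__main__":
    files = ["a.css", "b.css", "c.css"]
    release_1 = versioned_urls(files, "1")
    release_2 = versioned_urls(files, "2")  # suppose only a.css actually changed
    print(invalidated(release_1, release_2))  # ['a.css', 'b.css', 'c.css']
```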

The page references 3 CSS files, and only a.css changed in a particular release. If all the links are updated, the cached copies of b.css and c.css are thrown away for nothing, which is wasteful. Switching back into extreme mode, we find that to solve this problem, the URL change must be tied to the file content. In other words, a file's URL should change only when its content changes, which gives precise cache control at the level of individual files. What is tied to file content? The natural choice is a digest (hash) algorithm that computes a digest of each file. For practical purposes the digest maps one-to-one to the file content (collisions are possible in theory but negligible in practice), which provides a basis for precise per-file cache control. Okay, let's change the URLs to include the digest:
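A minimal sketch of that change, assuming a truncated SHA-256 digest and the same `?v=` query form as the third option in the summary (both are illustrative choices). Each URL now depends only on its own file's bytes, so editing `a.css` leaves the URLs of `b.css` and `c.css`, and therefore their cache entries, untouched:

```python
import hashlib


def digest(content: bytes, length: int = 8) -> str:
    """Short content digest; SHA-256 is an illustrative choice."""
    return hashlib.sha256(content).hexdigest()[:length]


def digest_urls(files: dict[str, bytes]) -> dict[str, str]:
    """Tag each URL with its own file's digest, so it changes only with that content."""
    return {name: f"{name}?v={digest(body)}" for name, body in files.items()}


def invalidated(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Resources whose URL changed between two releases."""
    return [name for name, url in new.items() if old.get(name) != url]


if __name__ == "__main__":
    release_1 = {"a.css": b"a { color: red }", "b.css": b"b {}", "c.css": b"c {}"}
    release_2 = {**release_1, "a.css": b"a { color: blue }"}  # only a.css edited
    print(invalidated(digest_urls(release_1), digest_urls(release_2)))  # ['a.css']
```

Note that with the query form the file itself still lives at the same path on the server, so each release overwrites it.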

Now, when a file is modified, only that file's URL changes. This seems perfect. Is it enough? Big companies will tell you: not at all! Sigh~~~ Let me catch my breath. To push website performance further, modern internet companies deploy static resources separately from dynamic web pages. Static resources are deployed on CDN nodes, and the resource references in the page are changed to the corresponding deployment paths:

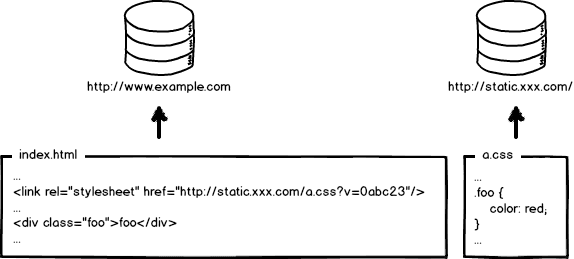
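Switching references to CDN paths is usually done by a build step rather than by hand. The sketch below rewrites `href`/`src` attributes that point at known local assets. `CDN_BASE` is a hypothetical origin, the `?v=<digest>` form is an assumption, and a regular expression stands in for a real HTML parser, so only double-quoted attributes are handled:

```python
import hashlib
import re

CDN_BASE = "https://cdn.example.com/static"  # hypothetical CDN origin


def digest(content: bytes, length: int = 8) -> str:
    return hashlib.sha256(content).hexdigest()[:length]


def rewrite_html(html: str, assets: dict[str, bytes]) -> str:
    """Point local href/src references at the CDN, tagged with each file's digest."""

    def replace(match: re.Match) -> str:
        attr, path = match.group(1), match.group(2)
        if path not in assets:
            return match.group(0)  # external or unknown reference: leave it alone
        return f'{attr}="{CDN_BASE}/{path}?v={digest(assets[path])}"'

    return re.sub(r'\b(href|src)="([^"]+)"', replace, html)


if __name__ == "__main__":
    page = '<link rel="stylesheet" href="a.css"><script src="app.js"></script>'
    print(rewrite_html(page, {"a.css": b"a {}", "app.js": b"run()"}))
```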

Okay, so when I need to update static resources, I also update their references in the HTML, like this:

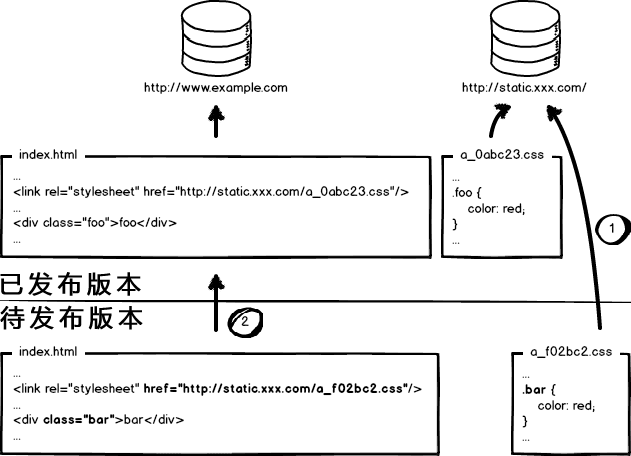

In this release, both the page structure and the styles changed, and the URLs of the static resources were updated accordingly. Now, dear front-end developers, when deploying the code, should we deploy the page first or the static resources first?

Deploy the page first, then the resources: in the interval between the two deployments, a user who visits gets the new page structure, but since the resource files on the server have not been updated yet, the new page loads the old resources, and the browser caches those old resources under the new URLs as if they were the new version. The result is a page with broken styles, and unless the user manually refreshes, it keeps displaying incorrectly until the resource cache expires.

Deploy the resources first, then the page: in the interval, a user who already has the old resources cached gets the old page, whose resource references have not changed, so the browser uses the local cache directly and the page displays correctly. However, users with no local cache, or with an expired one, get the old page with the new resources, so the page breaks. Once the page is fully deployed, these users can use it normally again.

In short, deploying either one first breaks the page during deployment. For low-traffic projects, you can make the developers suffer a little: quietly release late at night, deploying the static resources first and then the page, which minimizes the problem. Large companies, however, have no "absolute off-peak" period, only "relatively off-peak" ones. So, to provide stable service, we must keep pursuing perfection!

This strange problem comes from "overwriting" releases: the resources "to be released" overwrite the resources "already released". The solution is simple: make releases "non-overwriting".
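The difference between the two release styles can be simulated with a dictionary standing in for the CDN. This is a toy model: the function names and the `name_digest.ext` layout are hypothetical. With an overwriting release, publishing the new `a.css` before the page changes what the old page receives; with a non-overwriting release, the old page keeps resolving to the old bytes:

```python
import hashlib
from pathlib import PurePosixPath


def digest(content: bytes, length: int = 8) -> str:
    return hashlib.sha256(content).hexdigest()[:length]


def overwriting_publish(cdn: dict[str, bytes], path: str, content: bytes) -> str:
    """Store under the plain path; a ?v= query would not change which file is stored."""
    cdn[path] = content
    return path


def non_overwriting_publish(cdn: dict[str, bytes], path: str, content: bytes) -> str:
    """Store under a digest-bearing name, so earlier versions stay in place."""
    p = PurePosixPath(path)
    key = str(p.with_name(f"{p.stem}_{digest(content)}{p.suffix}"))
    cdn[key] = content
    return key


if __name__ == "__main__":
    old_css, new_css = b"a { color: red }", b"a { color: blue }"

    cdn: dict[str, bytes] = {}
    old_ref = overwriting_publish(cdn, "a.css", old_css)  # what the live page links to
    overwriting_publish(cdn, "a.css", new_css)            # static files deployed first
    print(cdn[old_ref] == old_css)  # False: the old page now gets the new styles

    cdn = {}
    old_ref = non_overwriting_publish(cdn, "a.css", old_css)
    new_ref = non_overwriting_publish(cdn, "a.css", new_css)
    print(cdn[old_ref] == old_css, old_ref != new_ref)  # True True
```

Because every published version keeps its own path, deploying all static resources before the pages no longer disturbs pages that are already live.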

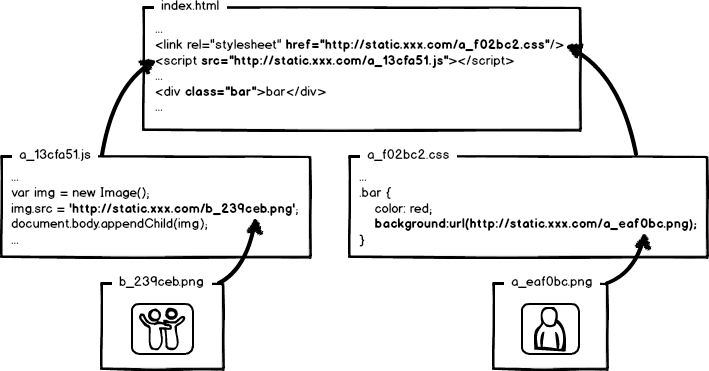

As shown in the image above, rename each resource file using its content digest and include the digest in the file's release path. This way, when a resource with modified content is released, it is deployed as a new file and does not overwrite the existing one. During deployment, release all static resources first and then roll out the pages gradually. This solves the problem quite well.

So the basic requirements for static resource optimization at large companies are:

- configure long-lived local caching to save bandwidth and improve performance;
- use content digests as the basis for cache updates, for precise cache control;
- deploy static resources on a CDN to optimize network requests;
- use non-overwriting releases for smooth upgrades.

With this set of optimizations in place, we have a fairly complete static resource cache-control solution. Note also that this treatment must be applied everywhere the front end loads static resources: not only the JS and CSS referenced by the page, but also the resource paths referenced inside JS and CSS files. Because the URLs contain digests, a change in a referenced resource's digest changes the content of the file that references it, so digests change in a cascade. The rough schematic diagram is as follows:
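The cascade can also be sketched in code. In this sketch (the helper names, the `name_digest.ext` layout, and the restriction to unquoted `url(...)` references in CSS are all assumptions), files are hashed in dependency order: the image is hashed first, its hashed name is written into the CSS, and only then is the CSS itself hashed. Changing only the image therefore gives the CSS a new name too:

```python
import hashlib
import re
from pathlib import PurePosixPath


def digest(content: bytes, length: int = 8) -> str:
    return hashlib.sha256(content).hexdigest()[:length]


def hashed_name(path: str, content: bytes) -> str:
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}_{digest(content)}{p.suffix}"))


def build(sources: dict[str, bytes], order: list[str]) -> dict[str, str]:
    """Map each source path to its published, digest-bearing name.

    `order` must list dependencies before the files that reference them.
    """
    published: dict[str, str] = {}
    for path in order:
        content = sources[path]
        if path.endswith(".css"):
            # Rewrite url(x) to the already-hashed name of x before hashing the CSS.
            text = re.sub(
                r"url\(([^)]+)\)",
                lambda m: f"url({published.get(m.group(1), m.group(1))})",
                content.decode("utf-8"),
            )
            content = text.encode("utf-8")
        published[path] = hashed_name(path, content)
    return published


if __name__ == "__main__":
    v1 = {
        "logo.png": b"old pixels",
        "a.css": b".logo { background: url(logo.png) }",
        "b.css": b"p { margin: 0 }",
    }
    v2 = {**v1, "logo.png": b"new pixels"}  # only the image changed
    order = ["logo.png", "a.css", "b.css"]
    r1, r2 = build(v1, order), build(v2, order)
    print(r1["a.css"] != r2["a.css"])  # True: renamed although its source is unchanged
    print(r1["b.css"] == r2["b.css"])  # True: b.css references nothing that changed
```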

Okay, so far we have quickly covered the optimization and deployment issues around static resource caching in front-end engineering. Next comes the question: how the heck are engineers supposed to write code under all this?! Explaining how optimization and engineering fit together involves a whole range of engineering issues: modular development, resource loading, request merging, front-end frameworks, and so on. The above is just the beginning; the real substance lies in the solution. But there is too much to cover, so I will expand on it gradually when I have time. Or you can read some of the breakdowns on my blog: fouber/blog · GitHub. In short, front-end performance optimization is definitely an engineering problem!
